Recent experience suggests that a microservices-based architecture can be a good option when implementing new features.

This is largely due to the versatility of microservices: although the approach is relatively new, it has already produced good results, and its adoption has grown quickly in recent years. As the .NET platform has evolved (at the time of writing, it is in its sixth version), implementing microservices has become even simpler.

In this article, we’ll spend a little time defining “microservices” and then we will create a microservice from scratch.

What Are Microservices?

Although there is no exact definition of microservices, Martin Fowler, one of the main references on the subject, describes them as a way of designing a software application as a set of small services, each focused on a single business capability, that run and communicate with each other independently. Just as each service runs independently, each one is also deployed independently.

Why Microservices?

We can say that microservices are the opposite of monoliths, and there is much debate about which approach is better. There are many good reasons to use a monolith, and Fowler himself has argued in favor of monoliths in many situations, but let's focus on the advantages of microservices.

Microservices make it easier to develop, test and deploy isolated parts of an application. Each microservice can be scaled independently as needed, and its deployment is simpler because it does not depend on the other parts of the system.

Typically, each microservice has its own database, reserved for its own scope, which avoids the many problems that can arise when two or more systems share the same database.

Of course, microservices do not solve every problem, and they have disadvantages too. One of them is the added complexity of splitting a module into several services that must communicate over the network and be deployed and monitored separately. Even so, many systems built on microservices-based architectures have achieved good results in recent years.

ASP.NET Core and Microservices

Like the vendors of other development platforms, Microsoft has invested heavily in meeting the requirements of microservices-based architectures, and today .NET provides many resources for this purpose.

Microsoft’s official website has a lot of content about microservices-based architecture, including ebooks, tutorials, videos and challenges to help developers work with them.

With .NET 6, developing apps in a microservices architecture became even easier thanks to the new minimal APIs feature, which lets you define HTTP endpoints with very little code and removes the need for the controllers and the Startup class that were previously required.

Practical Approach

In this article, we will create a simple microservice that sends a request to an external API and returns the data it receives in its own response.

Create the Project

To follow this tutorial, you need to download and install version 6 of the .NET SDK (Software Development Kit).

You can access the full source code at this link: Source Code.

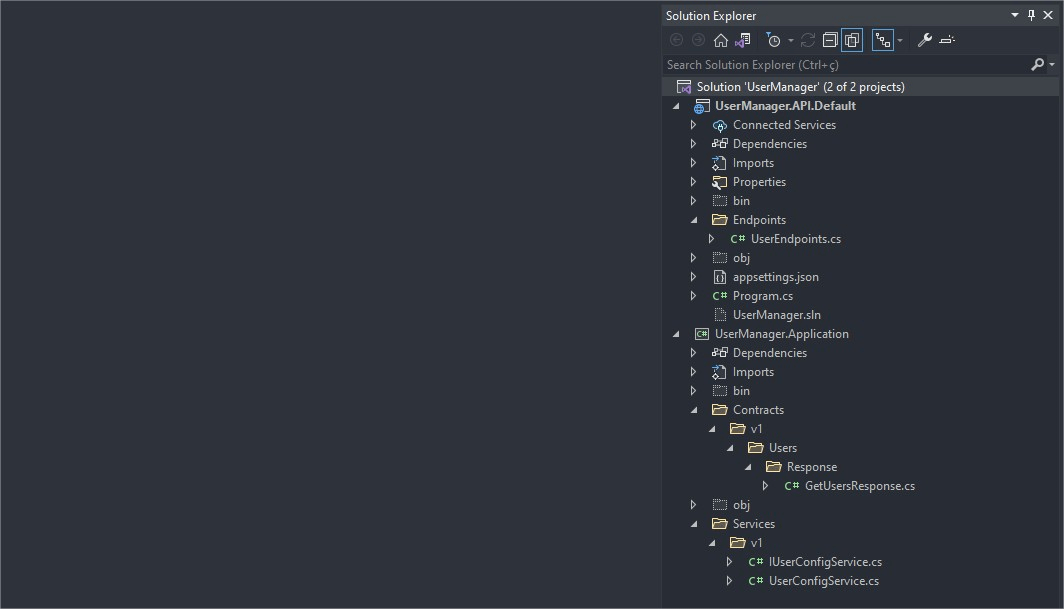

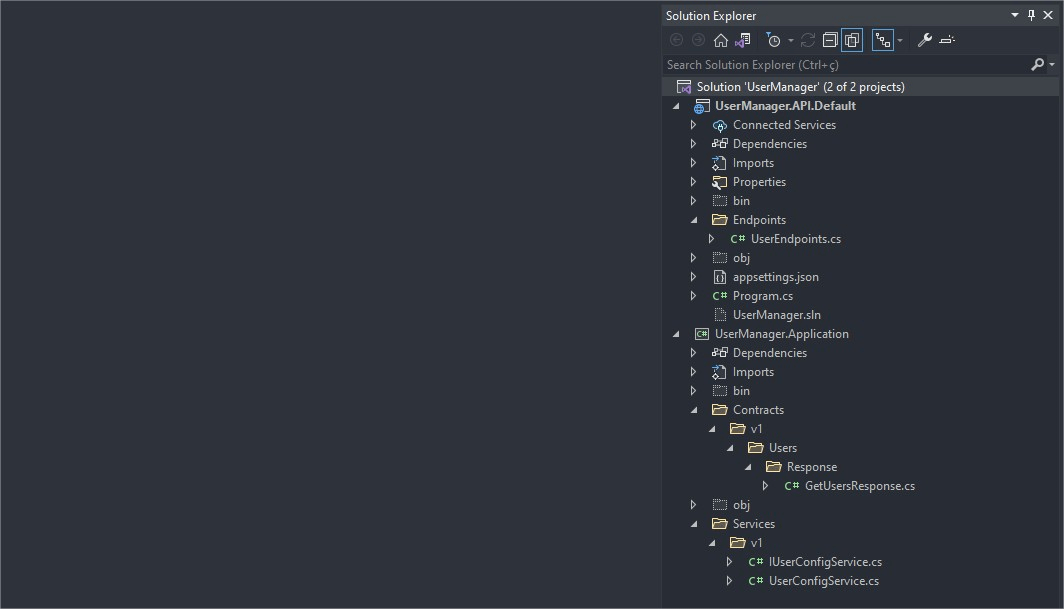

The final structure of the project will be as follows:

In your command prompt, run the following command to create your minimal API project:

```
dotnet new web -o UserManager -f net6.0
```

What does this command do?

- The “dotnet new web” command creates a new project from the ASP.NET Core Empty template, which in .NET 6 is a minimal API with a single “Hello World!” endpoint.

- The “-o” option sets the output directory, creating a directory named UserManager where your app is stored.

- The “-f net6.0” option sets the target framework, in this case .NET 6.

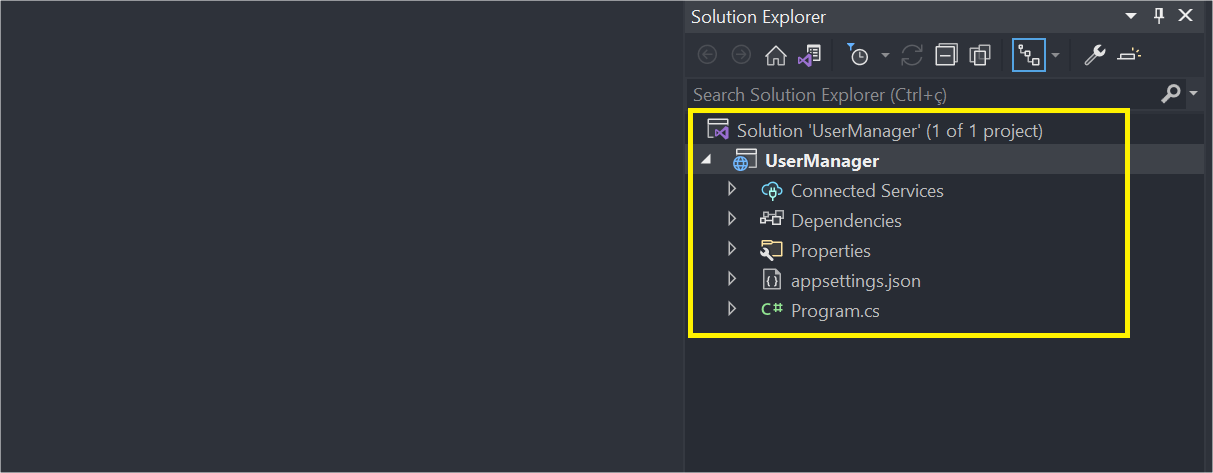

Now open the “UserManager.csproj” file, generated at the root of the project, in your favorite IDE. This tutorial uses Visual Studio 2022.

The previous command generates the standard minimal API project structure for .NET 6.
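For reference, in .NET 6 the Program.cs produced by this template is essentially the following. It uses top-level statements, has no Startup class or controllers, and relies on the implicit global usings (such as Microsoft.AspNetCore.Builder) enabled in the generated project file.

```csharp
// Program.cs as generated by "dotnet new web" (ASP.NET Core Empty template) in .NET 6.
// WebApplication is available through the template's implicit global usings.
var builder = WebApplication.CreateBuilder(args);
var app = builder.Build();

// A single endpoint: GET / responds with the text "Hello World!"
app.MapGet("/", () => "Hello World!");

// Start the web server and block until it shuts down
app.Run();
```

Running the project and opening its root URL returns the text “Hello World!”; the rest of this tutorial replaces this file.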

Create the Microservice

The minimal API we created already contains everything we need to start implementing our microservice, which will have the following structure:

```
/Solution.sln
|
|---- UserManager.API.Default <-- Public API
|     |---- Program.cs <-- Dependency injection
|     |---- /Endpoints
|           |---- UserEndpoints.cs <-- API endpoints
|
|---- UserManager.Application <-- Layer that exposes the repository and services
      |---- /Contracts <-- Contracts exposed to the client
      |     |---- /v1 <-- Version
      |           |---- /Users <-- Request and response classes
      |                 |---- /Response
      |                       |---- GetUsersResponse.cs
      |
      |---- /Services
            |---- /v1 <-- Version
                  |---- IUserConfigService <-- Service interface
                  |---- UserConfigService <-- Service class
```

In Visual Studio, rename the project from “UserManager” to “UserManager.API.Default”. Then right-click the solution name and follow this sequence:

Add --> New Project… --> Class Library --> Next --> (Put the name: “UserManager.Application”) --> Next --> .NET 6.0 --> Create

This creates the layer that exposes the repository and services. Now we will create the contracts that are exposed to the client. In the UserManager.Application project, create a new folder named “Contracts”.

Inside it, create the folder structure v1 --> Users --> Response. Inside “Response”, create a new class called “GetUsersResponse” and replace the generated code with the response contract.

This class holds a list of users, which will contain the data received in response to the request sent to the external API.
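A minimal sketch of the contract might look like this. It assumes that `User` is a positional record holding only a subset of the fields returned by jsonplaceholder (`id`, `name`, `username`, `email`) and that the list is exposed as a settable `Users` property; the field selection and the `Users` name are assumptions, not the article's exact code.

```csharp
// Contracts/v1/Users/Response/GetUsersResponse.cs (sketch)
using System.Collections.Generic;

namespace UserManager.Application.Contracts.v1.Users.Response;

// Assumed shape: a subset of the fields returned by jsonplaceholder's /users endpoint.
public record User(int Id, string Name, string Username, string Email);

public class GetUsersResponse
{
    // Users received from the external API, mapped to the User record above.
    public List<User> Users { get; set; } = new();
}
```

Using a record for `User` gives value equality and a compact, immutable definition, which suits a contract type that is only read after deserialization.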

Now let’s create the service class which will contain the microservice’s business rules.

Still in the UserManager.Application project, create a new folder named “Services” and, inside it, a folder called “v1”. Inside v1, create a new interface called “IUserConfigService” and replace the generated code with the interface definition.

Then create a class called “UserConfigService” and replace the generated code with the service implementation.

You will need to install the “Newtonsoft.Json” library. You can do this through Visual Studio.
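A minimal sketch of the two files, shown together, might look like this. It assumes the `GetUsersResponse` contract from the previous step exposes a settable `Users` list of `User` records, and it receives its `HttpClient` through the constructor (to be registered as a typed client with `AddHttpClient`). That injection style is a design choice of this sketch, not necessarily the author's; the original may create the `HttpClient` directly. Json.NET's `JsonConvert.DeserializeObject` ignores JSON fields that have no matching member (such as `address` or `company`) by default.

```csharp
// Services/v1/IUserConfigService.cs and Services/v1/UserConfigService.cs (sketch)
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Threading.Tasks;
using Newtonsoft.Json;
using UserManager.Application.Contracts.v1.Users.Response;

namespace UserManager.Application.Services.v1;

public interface IUserConfigService
{
    Task<GetUsersResponse?> GetAllUsersAsync();
    Task<GetUsersResponse?> GetUserByIdAsync(int id);
}

public class UserConfigService : IUserConfigService
{
    private readonly HttpClient _httpClient;

    // The HttpClient is injected instead of being created on every call.
    public UserConfigService(HttpClient httpClient)
    {
        _httpClient = httpClient;
        _httpClient.BaseAddress ??= new Uri("https://jsonplaceholder.typicode.com/");
    }

    public async Task<GetUsersResponse?> GetAllUsersAsync()
    {
        // GET /users returns a JSON array of users
        using var response = await _httpClient.GetAsync("users");
        if (!response.IsSuccessStatusCode)
            return null; // the endpoint layer turns null into 404

        var json = await response.Content.ReadAsStringAsync();
        var users = JsonConvert.DeserializeObject<List<User>>(json);
        return users is null ? null : new GetUsersResponse { Users = users };
    }

    public async Task<GetUsersResponse?> GetUserByIdAsync(int id)
    {
        // GET /users/{id} returns a single JSON object, wrapped here in a one-item list
        using var response = await _httpClient.GetAsync($"users/{id}");
        if (!response.IsSuccessStatusCode)
            return null;

        var json = await response.Content.ReadAsStringAsync();
        var user = JsonConvert.DeserializeObject<User>(json);
        return user is null ? null : new GetUsersResponse { Users = new List<User> { user } };
    }
}
```

Returning `null` on a non-success status code keeps HTTP concerns out of the callers: the endpoint layer only has to decide between a 200 and a 404 response.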

Explanation

First, we created an interface that will contain the main methods of the service. Next, we created the user service class, which will implement these methods.

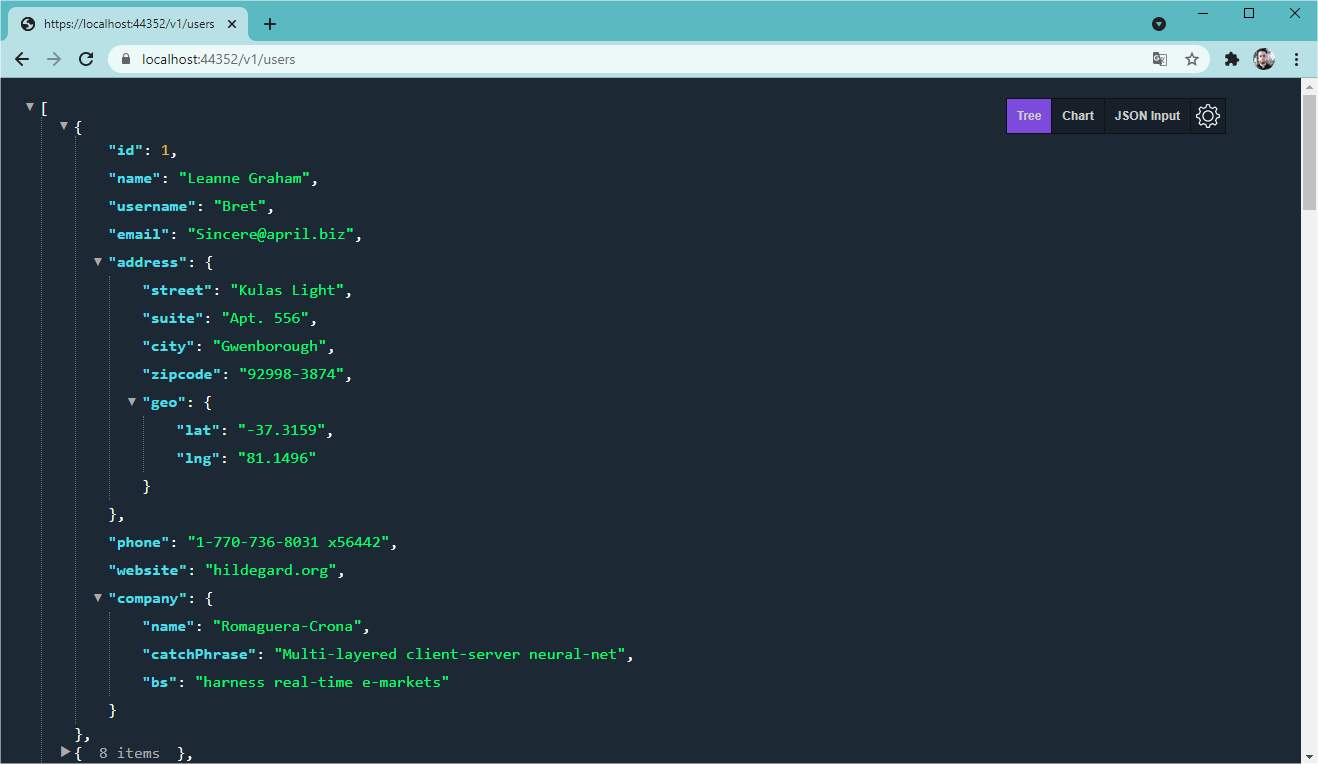

In the “GetAllUsersAsync” method, our service fetches a list of users from “jsonplaceholder.typicode.com”, which provides a free fake API for testing and prototyping. The request is made with the “HttpClient” class, which provides methods for sending HTTP requests and receiving HTTP responses.

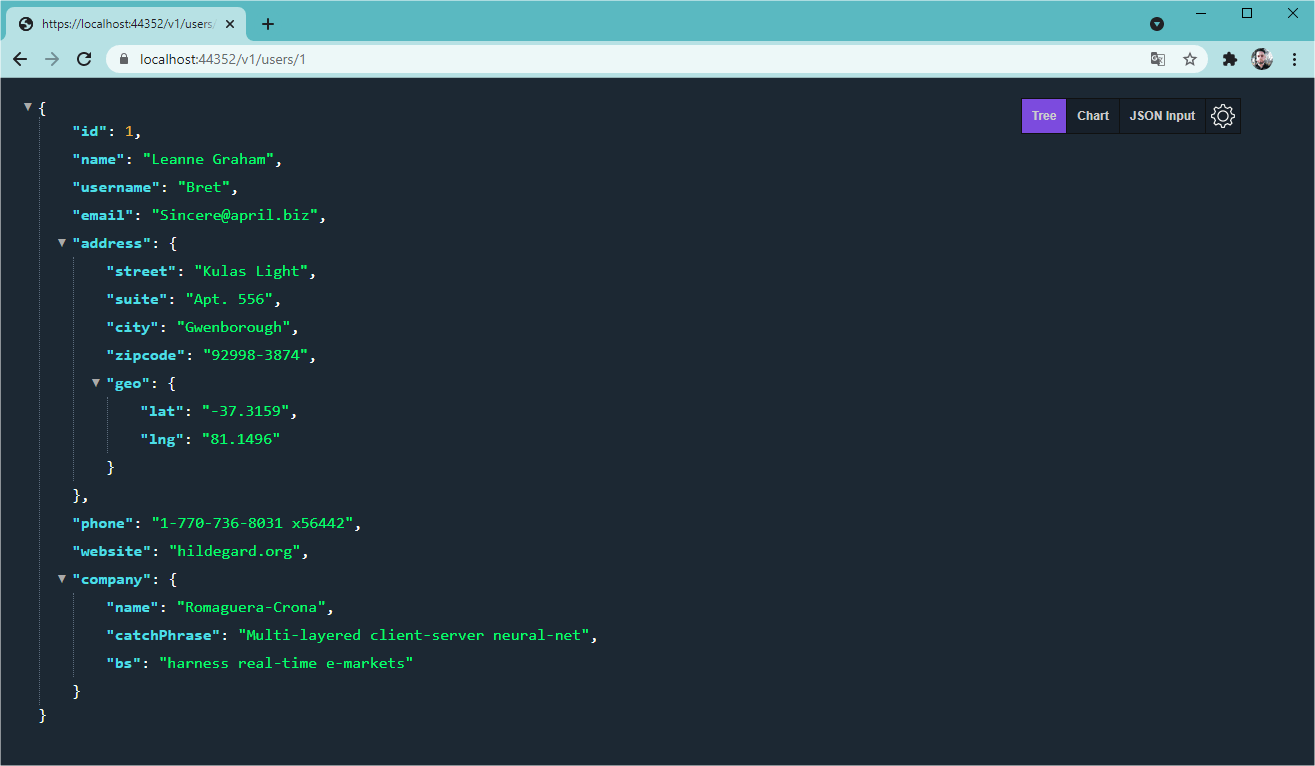

The “GetUserByIdAsync” method performs a parameterized search: it sends the user id in the request and returns the corresponding user's data.

In both cases, the API response is converted into a list of users compatible with the contract's “User” record.

Creating the Endpoints

Now we need to create the endpoints that will use the service methods. With minimal APIs in .NET 6, we no longer need a controller, so we'll create a class that defines the endpoints.

So, in the “UserManager.API.Default” project, create a new folder called “Endpoints” and, inside it, a class called “UserEndpoints”. Then replace the generated code with the endpoint definitions.
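A possible sketch of the class follows. It assumes the routes /v1/users and /v1/users/{id} used later in this article and the `IUserConfigService` interface created earlier; the handler names `GetAllUsers` and `GetUserById` are hypothetical. Registering the service with `AddHttpClient` (a typed client, so the framework supplies a managed `HttpClient` to the service's constructor) is this sketch's choice; the original might use `AddScoped` instead.

```csharp
// Endpoints/UserEndpoints.cs (sketch)
using System.Threading.Tasks;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Http;
using Microsoft.Extensions.DependencyInjection;
using UserManager.Application.Services.v1;

namespace UserManager.API.Default.Endpoints;

public static class UserEndpoints
{
    // Maps the versioned user routes onto the application.
    public static void MapUsersEndpoints(this WebApplication app)
    {
        app.MapGet("/v1/users", GetAllUsers);
        app.MapGet("/v1/users/{id:int}", GetUserById);
    }

    // Registers IUserConfigService -> UserConfigService as a typed HttpClient.
    public static void AddUserServices(this IServiceCollection services)
    {
        services.AddHttpClient<IUserConfigService, UserConfigService>();
    }

    // GET /v1/users: 200 with the user list, or 404 when the service returns null.
    private static async Task<IResult> GetAllUsers(IUserConfigService service)
    {
        var result = await service.GetAllUsersAsync();
        return result is null ? Results.NotFound() : Results.Ok(result);
    }

    // GET /v1/users/{id}: "id" is bound from the route, the service from DI.
    private static async Task<IResult> GetUserById(int id, IUserConfigService service)
    {
        var result = await service.GetUserByIdAsync(id);
        return result is null ? Results.NotFound() : Results.Ok(result);
    }
}
```

Passing method groups to `MapGet` relies on C# 10's natural delegate types, which .NET 6 minimal APIs support.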

You will need to add a reference to the “UserManager.Application” project in the “UserManager.API.Default” project. To do so, right-click the “Dependencies” node of the “UserManager.API.Default” project, choose “Add Project Reference…” and select the “UserManager.Application” project.

Explanation

In the “UserEndpoints” class, the endpoints are created in the “MapUsersEndpoints” method.

The “AddUserServices” method registers the service and its interface for dependency injection, and the other two methods use the service to return the search result; if the result is null, the response has a “NotFound” (404) status.

Now we will register the service and add the Swagger settings in Program.cs, replacing the template code in that file.

You will need to install the “Swashbuckle.AspNetCore” library. You can do this through Visual Studio.
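A possible Program.cs for UserManager.API.Default is sketched below. It assumes that `AddUserServices` is an `IServiceCollection` extension and `MapUsersEndpoints` a `WebApplication` extension, as described in the previous section. `AddEndpointsApiExplorer` and `AddSwaggerGen` make the minimal API endpoints visible to Swashbuckle. Restricting Swagger to the Development environment is a common convention rather than something the article specifies.

```csharp
// Program.cs in UserManager.API.Default (sketch)
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Hosting;
using UserManager.API.Default.Endpoints;

var builder = WebApplication.CreateBuilder(args);

// Register IUserConfigService and its implementation (extension method in UserEndpoints)
builder.Services.AddUserServices();

// OpenAPI document generation with Swashbuckle.AspNetCore
builder.Services.AddEndpointsApiExplorer();
builder.Services.AddSwaggerGen();

var app = builder.Build();

// Serve the OpenAPI JSON and the Swagger UI (at /swagger) in Development
if (app.Environment.IsDevelopment())
{
    app.UseSwagger();
    app.UseSwaggerUI();
}

// Map GET /v1/users and GET /v1/users/{id}
app.MapUsersEndpoints();

app.Run();
```

The Swagger UI's default route prefix is “swagger”, which matches the launch URL configured in launchSettings.json.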

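Then, in the “launchSettings.json” file (in the “Properties” folder), add the following line right below the setting "launchBrowser": true, keeping the trailing comma because other settings follow it:

```
"launchUrl": "swagger",
```

It must be added in two places, both inside “profiles”: the project's own profile and the “IIS Express” profile.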

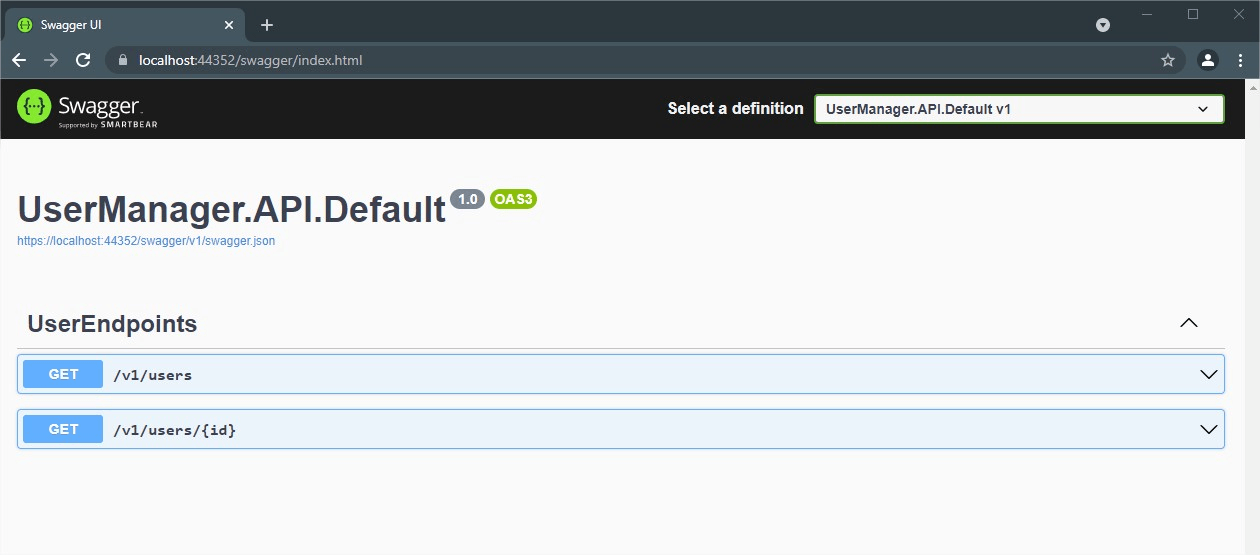

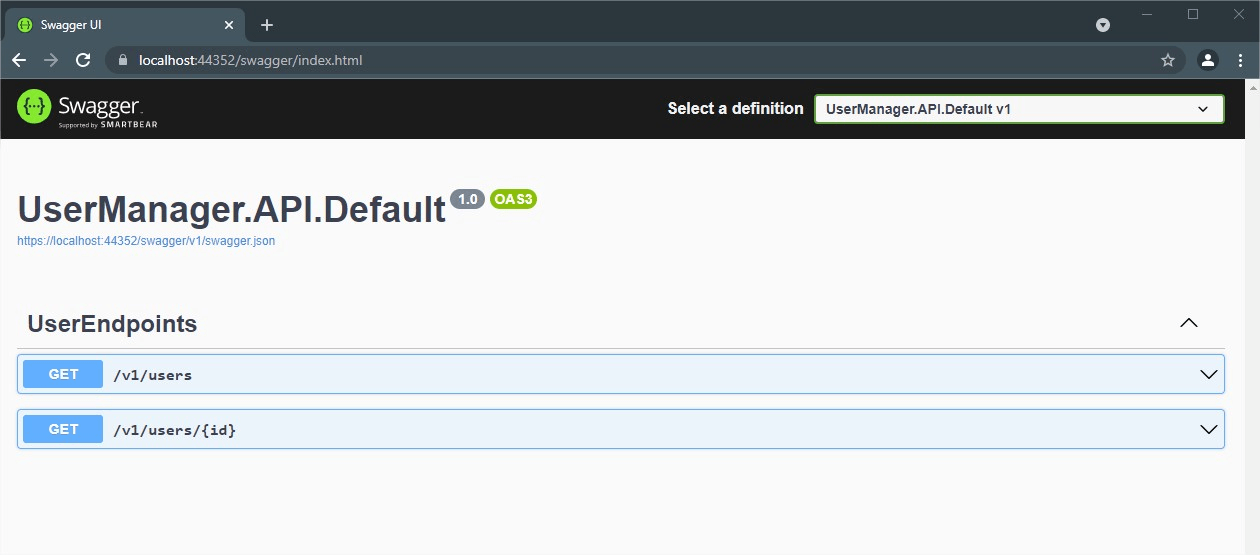

Finally, our microservice is ready to run. If you run it in Visual Studio using the “IIS Express” option, you will get the following result in your browser.

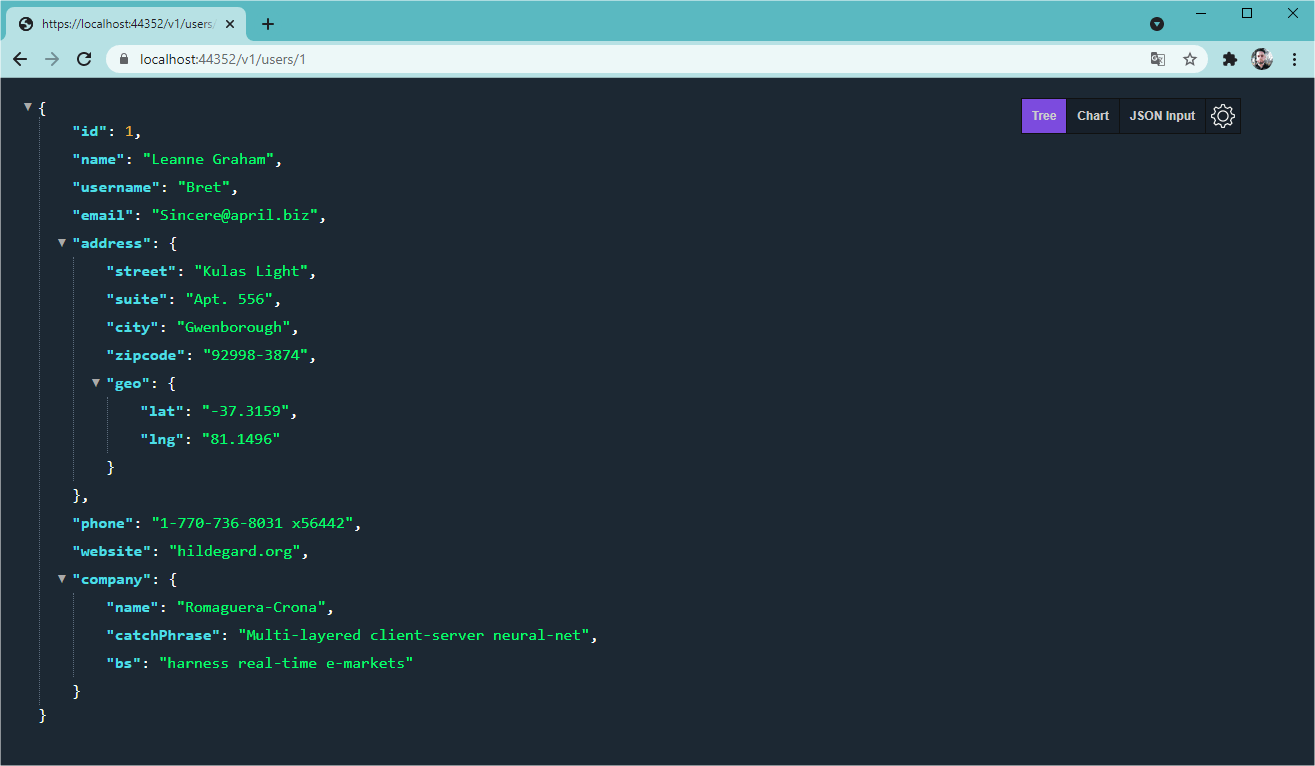

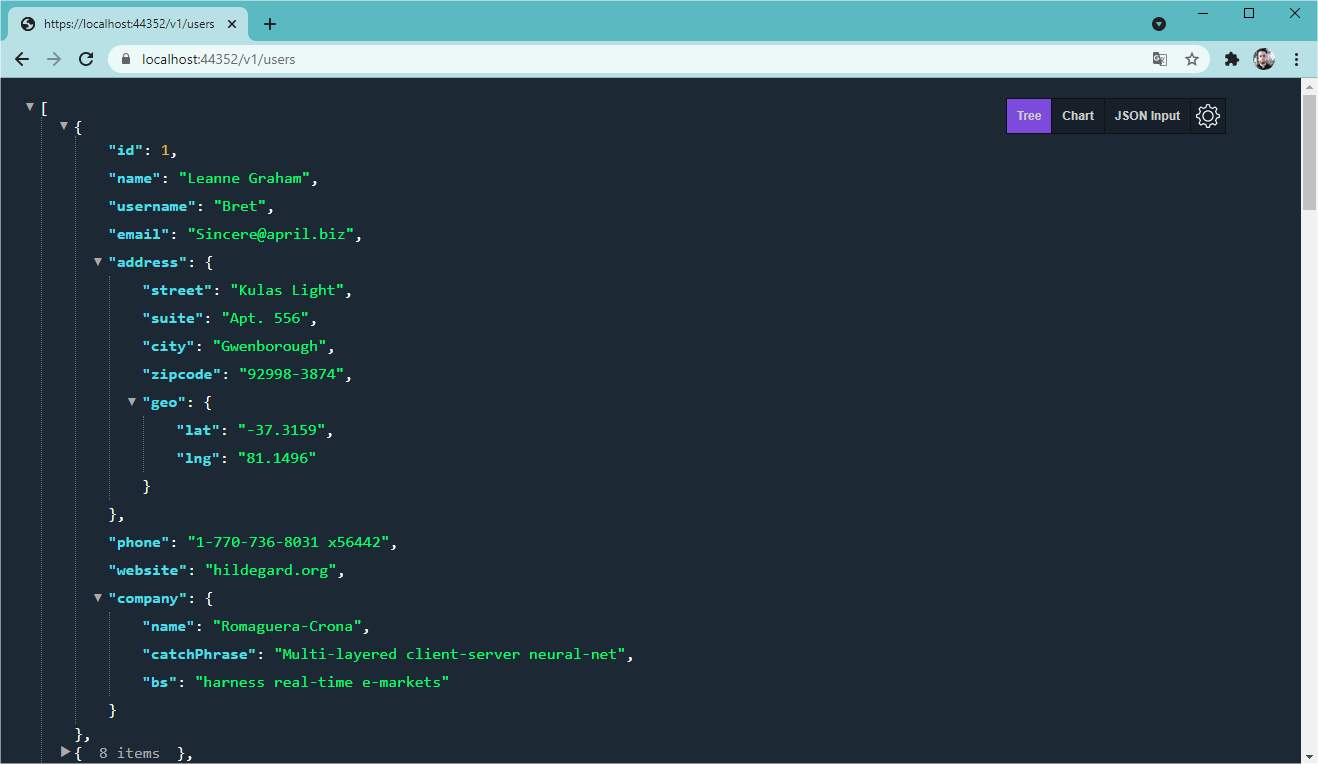

Now, if you access https://localhost:<port number>/v1/users and https://localhost:<port number>/v1/users/1, you will get, respectively, the list of all users and the data of the user whose id is 1.

Conclusion

In this article, we introduced microservices and created a simple microservice in .NET 6 that communicates with another API and returns user data.

There is plenty of room to extend this project, so feel free to add more features, such as create and update methods, database access, validation, unit tests and much more.