When building a GraphQL API, you often want to model the API around a "viewer". The viewer is the user who is currently authenticated and making the request to the API. This approach is called the "Viewer Pattern" and is used by many popular GraphQL APIs, such as those of Facebook/Meta, GitHub, and others.

We've recently had a Schema Design workshop with one of our clients and discussed how the Viewer Pattern could be leveraged in a federated GraphQL API. In this article, I want to share the insights from this workshop and explain the benefits of implementing the Viewer Pattern in a federated Graph.

What is the Viewer Pattern?

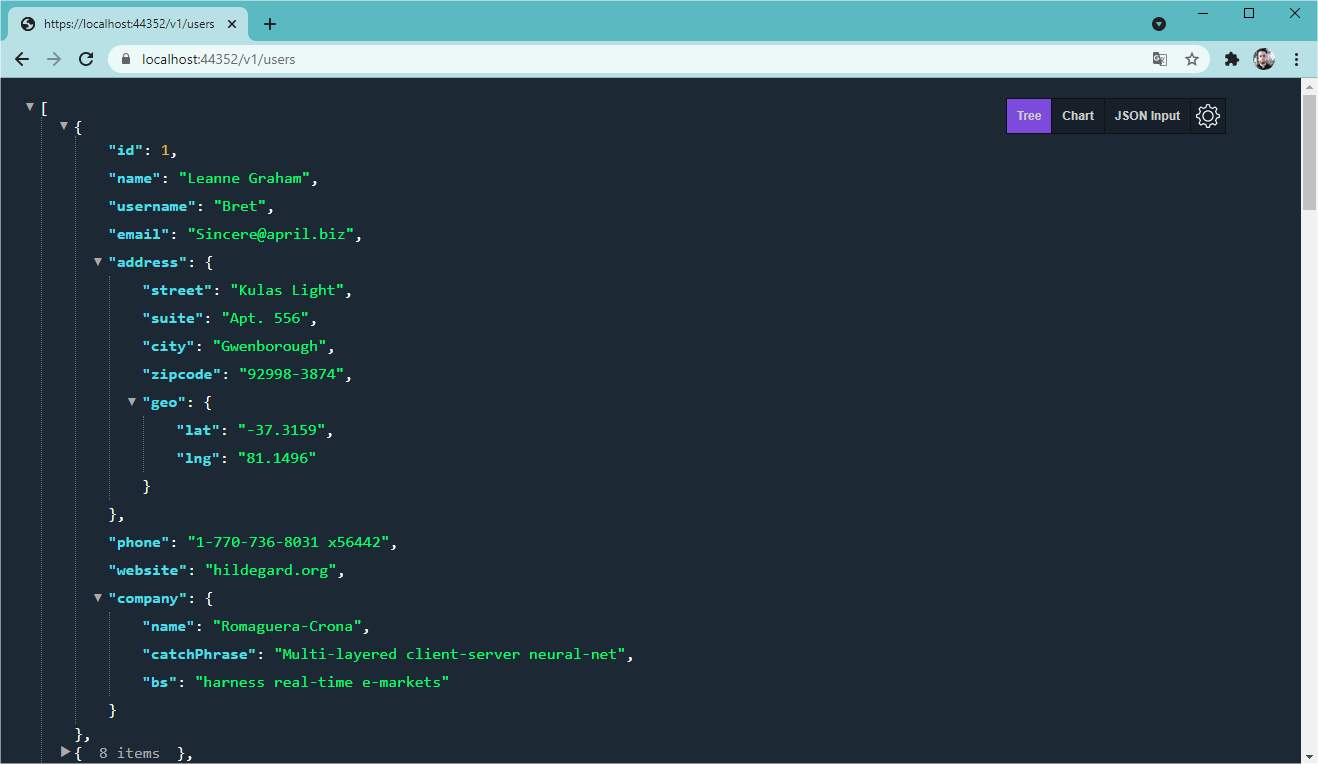

The Viewer Pattern usually adds a viewer, me, or user field to the root of the GraphQL schema. This field represents the currently authenticated user and can be used to fetch data that is specific to the user, such as their profile and settings, as well as data that is related to the user, such as their posts, comments, or other resources.

Let's take a look at an example schema that uses the Viewer Pattern:

In this example, the currently logged-in user can fetch their own profile, id, name, email, and all the posts and comments they have created. If you're thinking in the context of a social media platform, it makes sense to model the API around the user, as we've always got an authenticated user and we usually want to fetch data that is related to them.

At the same time, we also have a user field that can be used to fetch data about other users, but it requires the user to have the admin role. Modeling our API like this makes it easy to define access control rules and to fetch data that is specific to the current user.
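To make the access rules described above concrete, here is a minimal Python sketch. It is not the article's original schema: the SDL string, the `RequestContext` class, the `AuthError` exception, and the sample users are all hypothetical names chosen for illustration, and the resolvers are plain functions rather than bindings for any particular GraphQL library.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

# Hypothetical SDL, shown only to illustrate the shape of a viewer-based schema.
SCHEMA_SDL = """
type Query {
  viewer: User!          # the currently authenticated user
  user(id: ID!): User    # any user; assumed to require the admin role
}

type User {
  id: ID!
  name: String!
  email: String!
  posts: [Post!]!
  comments: [Comment!]!
}

type Post { id: ID! title: String! }
type Comment { id: ID! body: String! }
"""


class AuthError(Exception):
    """Raised when the request is unauthenticated or lacks a required role."""


@dataclass
class User:
    id: str
    name: str
    email: str
    roles: Set[str] = field(default_factory=set)


@dataclass
class RequestContext:
    # Assumption: an upstream layer has already authenticated the request.
    current_user: Optional[User] = None


# Hypothetical in-memory user store.
USERS = {
    "1": User("1", "Alice", "alice@example.com", {"admin"}),
    "2": User("2", "Bob", "bob@example.com"),
}


def resolve_viewer(ctx: RequestContext) -> User:
    """Root `viewer` field: the single place that requires authentication."""
    if ctx.current_user is None:
        raise AuthError("not authenticated")
    return ctx.current_user


def resolve_user(ctx: RequestContext, user_id: str) -> Optional[User]:
    """Root `user` field: only viewers with the admin role may look up others."""
    viewer = resolve_viewer(ctx)
    if "admin" not in viewer.roles:
        raise AuthError("admin role required")
    return USERS.get(user_id)
```

In this sketch, a non-admin can still read their own data through `resolve_viewer`, while `resolve_user` rejects them, which mirrors the split between the viewer field and the admin-only user field.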

Let's say we'd like to fetch the currently logged-in user's profile, posts, and comments. This is what the query would look like:

In contrast, if we didn't use the Viewer Pattern, we would have to pass the user's ID as an argument to every field that fetches data related to the user, like their profile, posts, comments, and so on.

In this case, the GraphQL Schema would look like this:

In order to fetch the same data as in the previous example, we would have to write a query like this:

How the Viewer Pattern affects Authorization in GraphQL Schema Design

As we've seen in the previous section, the Viewer Pattern has a big impact on how we model access control rules in our GraphQL schema. By adding a viewer field to the root of the Schema, we have a single point where we unify authorization rules. All child fields of the viewer field can assume that the user is authenticated and have access to the user ID, email, and other user-specific data like roles, permissions, and so on.

In contrast, if we didn't use the Viewer Pattern, as in the second example, we would have to spread authentication and access control rules across all root fields in our schema.
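The difference between the two approaches can be sketched in a few lines of Python. This is an illustration under assumptions, not a real GraphQL server: the `Context` class, the `POSTS` and `COMMENTS` stores, and all resolver names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical data stores keyed by user ID.
POSTS: Dict[str, List[str]] = {"1": ["Hello"], "2": ["Other"]}
COMMENTS: Dict[str, List[str]] = {"1": ["Nice"], "2": []}


class AuthError(Exception):
    pass


@dataclass
class Context:
    user_id: Optional[str]  # None when the request is unauthenticated


# --- With the Viewer Pattern: one gate at the root field ---

def resolve_viewer(ctx: Context) -> dict:
    if ctx.user_id is None:
        raise AuthError("not authenticated")
    return {"id": ctx.user_id}


# Child resolvers receive the viewer as their parent object, so they can
# assume an authenticated user and need no checks of their own.
def resolve_viewer_posts(viewer: dict) -> List[str]:
    return POSTS[viewer["id"]]


def resolve_viewer_comments(viewer: dict) -> List[str]:
    return COMMENTS[viewer["id"]]


# --- Without the Viewer Pattern: every root field repeats the check ---

def _require_self(ctx: Context, user_id: str) -> None:
    if ctx.user_id is None:
        raise AuthError("not authenticated")
    if ctx.user_id != user_id:
        raise AuthError("forbidden")


def resolve_posts(ctx: Context, user_id: str) -> List[str]:
    _require_self(ctx, user_id)  # must not be forgotten on any root field
    return POSTS[user_id]


def resolve_comments(ctx: Context, user_id: str) -> List[str]:
    _require_self(ctx, user_id)  # repeated again here
    return COMMENTS[user_id]
```

Both variants return the same data for a legitimate request. The second one simply has more places where a missing check would expose another user's data.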

Extending the Viewer Pattern with multi-tenant support

Another use case that we've come across is the need to support multi-tenancy in our GraphQL API. Let's assume we're building a Shop-System, and the goal of our API is to allow users to log into multiple shops and operate not just in the context of a user, but also in the context of a shop (tenant).

Let's modify our previous example to support multi-tenancy:

In this example, we've added a tenant field to the User type and a tenant argument to the viewer field. This allows us to fetch data that is specific to the user and the shop they are currently logged into.

Depending on the Shop-Site the user is currently logged into, the viewer field will return different data. We've also retained the user field, but extended it with a tenant argument as well to support multi-tenancy.
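As a rough sketch of how a tenant-aware viewer field might resolve, consider the following Python example. The `ACCOUNTS` store, the tenant names, and the `RequestContext` class are assumptions made for illustration; how a real system maps a login session to a tenant will differ.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


class AuthError(Exception):
    pass


@dataclass(frozen=True)
class User:
    id: str
    tenant: str
    email: str
    name: str


@dataclass
class RequestContext:
    user_id: Optional[str]  # None when the request is unauthenticated


# Hypothetical store: the same person has one account per shop (tenant).
ACCOUNTS: Dict[Tuple[str, str], User] = {
    ("shop-a", "1"): User("1", "shop-a", "alice@example.com", "Alice (Shop A)"),
    ("shop-b", "1"): User("1", "shop-b", "alice@example.com", "Alice (Shop B)"),
}


def resolve_viewer(ctx: RequestContext, tenant: str) -> User:
    """Resolve `viewer(tenant: ...)`: same user, different data per tenant."""
    if ctx.user_id is None:
        raise AuthError("not authenticated")
    user = ACCOUNTS.get((tenant, ctx.user_id))
    if user is None:
        raise AuthError(f"no account in tenant {tenant!r}")
    return user
```

Calling `resolve_viewer` with the same context but a different tenant returns a different account, which is the behaviour the tenant argument is meant to provide.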

We could also slightly modify this approach to reduce duplication of the tenant argument by creating a Tenant type and adding it to the viewer field:

The upside of this approach is that we've got rid of the duplicate tenant argument in the viewer and user fields. The downside is that we've introduced an additional level of nesting in our schema. Depending on the number of root fields that actually depend on the tenant argument, this might simply introduce unnecessary complexity to our schema. I think the previous example is a good compromise between simplicity and multi-tenancy support.

Implementing the Viewer Pattern in a Federated GraphQL API

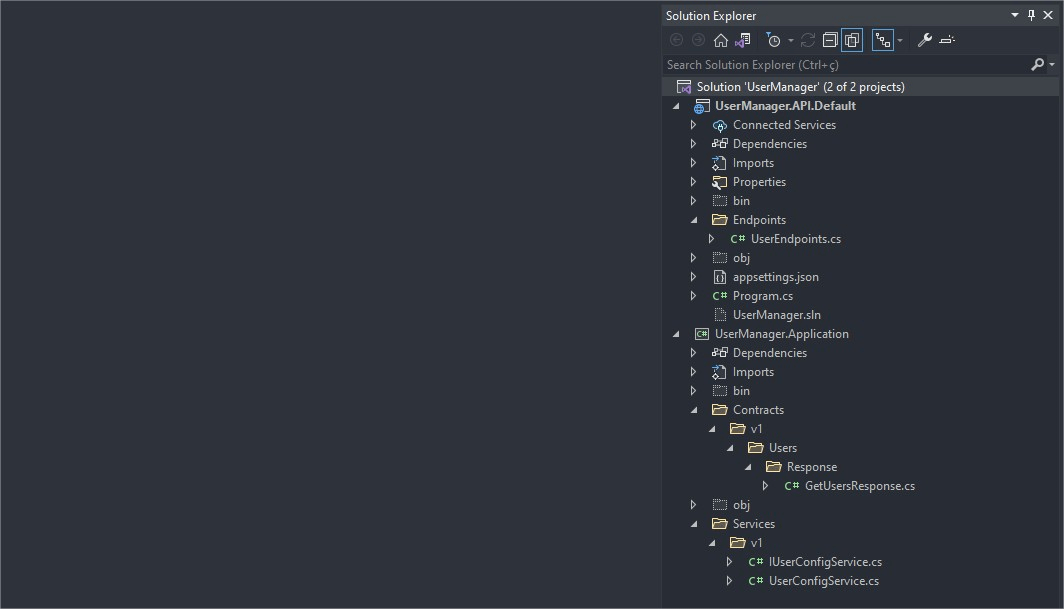

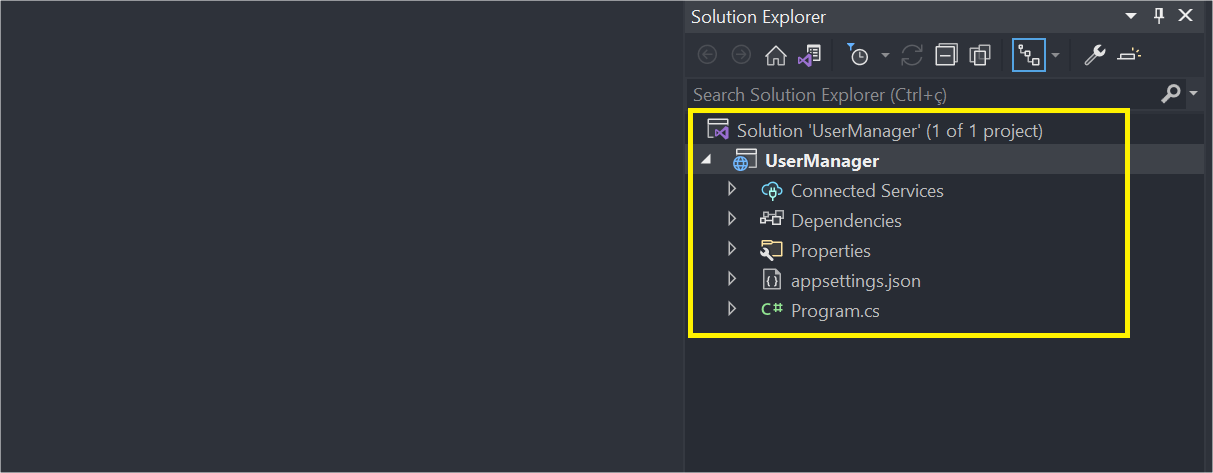

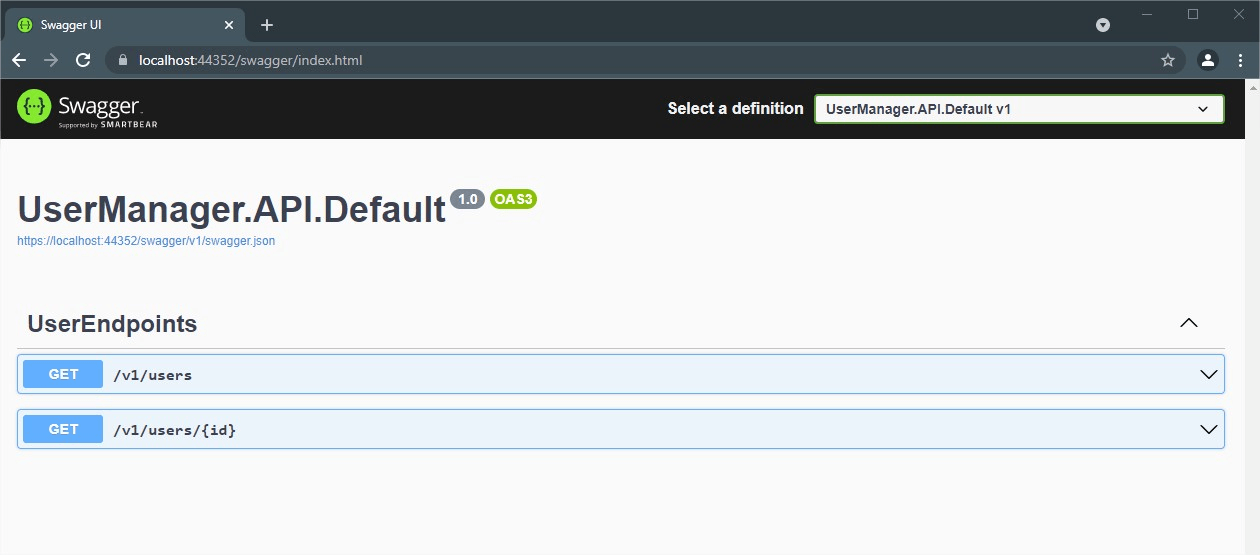

Now that we've seen how the Viewer Pattern can be used to model a GraphQL API, let's take a look at how we can implement it in a federated GraphQL API.

Let's assume we've got a federated GraphQL API that consists of multiple services: one service responsible for the user data (Users Subgraph) and another service responsible for the shopping cart (Shopping Subgraph).

As the Shopping Subgraph depends on the user information to fetch the associated shopping cart, we need to make sure that these fields are "arguments" to the User type in the Shopping Subgraph. The way we can model this is by using the @key directive to mark certain fields as "Entity Keys" in our Subgraphs.

You can think of the @key directive in a federated GraphQL API as a way to tell the Router Service what "foreign keys" are required to "join" the data from different Subgraphs. Federation, in a way, is like a distributed SQL database, where each Subgraph is a table and the Router Service is the central component that knows how to join the data from different tables.
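The join analogy can be illustrated with a small Python sketch. It treats each Subgraph as a list of rows and joins them on the key fields, which is only a mental model and not how a Router is actually implemented. The row contents and the function names `key_of` and `join_on_key` are hypothetical.

```python
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]

# The fields that act as the "foreign key" between Subgraphs in this sketch.
KEY_FIELDS: Tuple[str, ...] = ("id", "tenant", "email")


def key_of(row: Row, key_fields: Tuple[str, ...] = KEY_FIELDS) -> Tuple[Any, ...]:
    """Extract the entity key from a row as a hashable tuple."""
    return tuple(row[f] for f in key_fields)


def join_on_key(left: List[Row], right: List[Row],
                key_fields: Tuple[str, ...] = KEY_FIELDS) -> List[Row]:
    """Left join: extend each left row with the matching right row, if any."""
    index = {key_of(r, key_fields): r for r in right}
    return [{**row, **index.get(key_of(row, key_fields), {})} for row in left]


# "Table" owned by the Users Subgraph (hypothetical data).
users_rows = [
    {"id": "1", "tenant": "shop-a", "email": "alice@example.com", "name": "Alice"},
]

# "Table" owned by the Shopping Subgraph (hypothetical data).
shopping_rows = [
    {"id": "1", "tenant": "shop-a", "email": "alice@example.com",
     "cart": {"items": [{"sku": "book", "quantity": 2}]}},
]

merged = join_on_key(users_rows, shopping_rows)
```

After the join, each user row carries both the fields owned by the Users Subgraph and the cart owned by the Shopping Subgraph.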

Let's take a look at how we would model the User and Shopping Subgraph to support the Viewer Pattern:

In this example, we've added the @key directive to the User type in both the Users and Shopping Subgraph. This tells the Router that we need the id, tenant, and email fields to join additional data on the User type from different Subgraphs.

If we compose the federated schema from these two Subgraphs, we'd end up with a federated schema that looks like this:

Let's take a look at how we would fetch the currently logged-in user's profile, cart, and cart items:

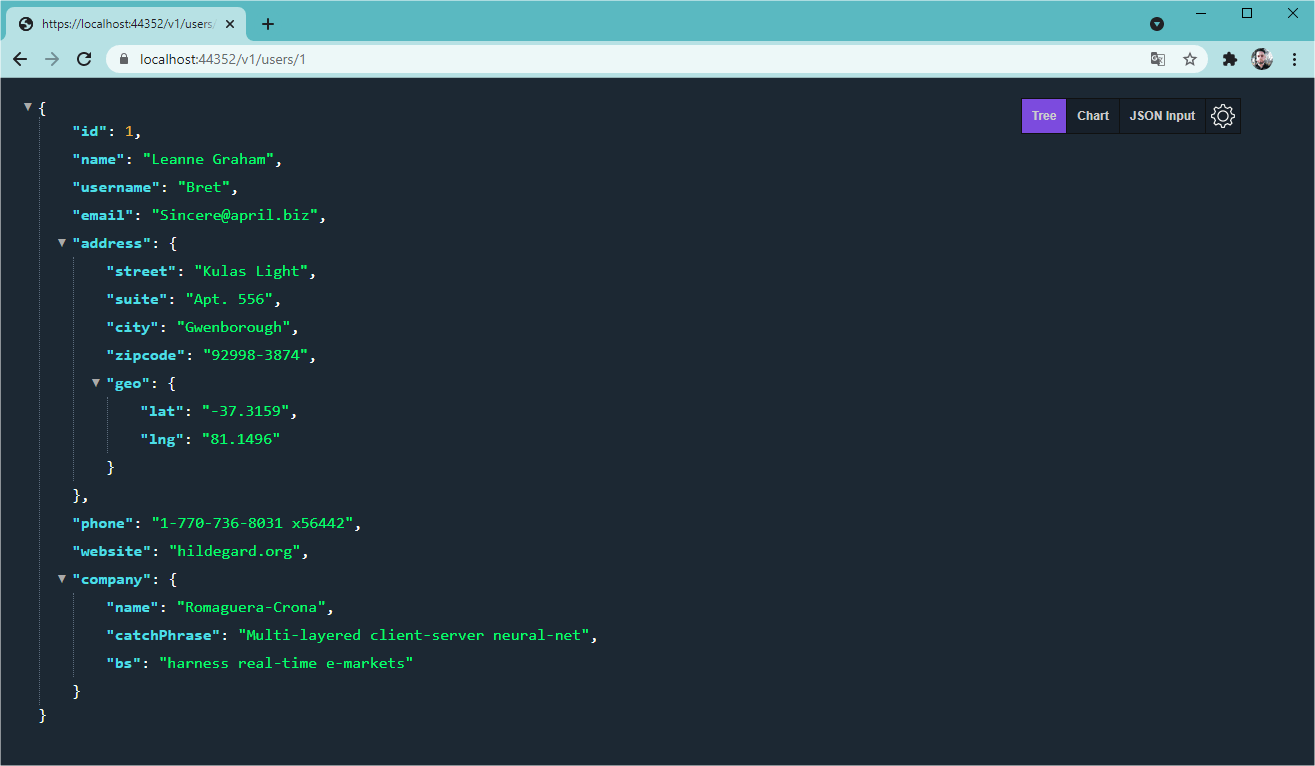

Let's assume the Users Subgraph returns the following data:

The Router would now make the following request to the Shopping Subgraph:

Note how we're passing the "Viewer Information" to the Shopping Subgraph in the representations variable.
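For readers who want to see the shape of such a request, here is a hedged Python sketch of how the request body could be assembled. The `_entities` field, the `_Any` scalar, and the `representations` variable come from the Federation subgraph specification; the concrete user values, the cart fields `id` and `quantity`, and the helper `build_entities_request` are assumptions for illustration. The exact query text a given Router generates may differ.

```python
import json
from typing import Any, Dict

# Entity query in the style of the Federation subgraph specification.
# The selected cart fields are hypothetical.
ENTITIES_QUERY = """
query ($representations: [_Any!]!) {
  _entities(representations: $representations) {
    ... on User {
      cart {
        items { id quantity }
      }
    }
  }
}
""".strip()

# Key fields assumed to be declared via @key on the User type.
KEY_FIELDS = ("id", "tenant", "email")


def build_entities_request(typename: str, entity: Dict[str, Any]) -> Dict[str, Any]:
    """Build the request body a Router might send to fetch extra entity fields."""
    representation: Dict[str, Any] = {"__typename": typename}
    # Only the key fields travel to the other Subgraph, not the whole object.
    representation.update({f: entity[f] for f in KEY_FIELDS})
    return {
        "query": ENTITIES_QUERY,
        "variables": {"representations": [representation]},
    }


# Hypothetical data returned by the Users Subgraph for the viewer.
viewer_from_users_subgraph = {
    "id": "1",
    "tenant": "shop-a",
    "email": "alice@example.com",
    "name": "Alice",
}

request_body = build_entities_request("User", viewer_from_users_subgraph)
print(json.dumps(request_body["variables"], indent=2))
```

Note that `name` is not part of the representation: only `__typename` and the key fields are forwarded.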

We haven't just structured our GraphQL Schema around the Viewer Pattern, but we've also established a standardised way for Subgraphs to fetch data that is specific to the currently logged-in user.

There's no need to pass the user's ID, tenant, or email as arguments to every field or to pass them as headers in the HTTP request, which would require the Subgraph to use a custom authentication middleware to extract the user's information and attach it to the request context.

How the Viewer Pattern simplifies the implementation of Subgraphs & Federated GraphQL APIs

If we're building a distributed system, it's quite likely that this system spans multiple teams. Each team is responsible for implementing and maintaining its own Subgraphs. It's possible that different teams use different programming languages, frameworks, and tools to build their Subgraphs. As such, it's important to establish standards, best practices, and patterns that make it easy for teams to build Subgraphs that work well together.

In an ideal world, we don't require all teams to implement a custom authentication middleware to extract the user's information from the request and attach it to the request context. We also don't want to require all teams to spread access control rules across all fields in their schema. We'd like to keep the implementation of Subgraphs as simple as possible.

By using the Viewer Pattern, we've established a standardised way for teams to add fields to the composed federated schema. In a Federated GraphQL API leveraging the Viewer Pattern, you can understand the User type as the "entry point" to the Subgraph.

Just as an example, let's add another Subgraph that adds purchase history to the User type:

As you can see, the User type with the 3 fields id, tenant, and email as well as the @key directive is the "entry point" to the Subgraph. We can use this pattern as a blueprint for all Subgraphs.

We don't have to worry about how the user's information is extracted from the request, and all Subgraphs can follow the same pattern that's natively supported by GraphQL Federation, as we're simply reading the representations variable from the request.
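On the Subgraph side, the blueprint could look roughly like the following Python sketch for a purchase history service. Everything here is an assumption for illustration: the `Viewer` class, the `PURCHASES` store, the `purchases` field name, and the `resolve_entities` function are hypothetical, and a real Subgraph would usually rely on its GraphQL framework's entity resolver support.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple


@dataclass(frozen=True)
class Viewer:
    """The viewer as received through an entity representation."""
    id: str
    tenant: str
    email: str

    @classmethod
    def from_representation(cls, rep: Dict[str, Any]) -> "Viewer":
        if rep.get("__typename") != "User":
            raise ValueError(f"unexpected typename: {rep.get('__typename')!r}")
        missing = [f for f in ("id", "tenant", "email") if f not in rep]
        if missing:
            raise ValueError(f"representation is missing key fields: {missing}")
        return cls(rep["id"], rep["tenant"], rep["email"])


# Hypothetical purchase store keyed by (tenant, user id).
PURCHASES: Dict[Tuple[str, str], List[Dict[str, Any]]] = {
    ("shop-a", "1"): [{"orderId": "o-100", "total": 42.0}],
}


def resolve_entities(representations: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Resolve the fields this Subgraph contributes to each User entity."""
    results = []
    for rep in representations:
        viewer = Viewer.from_representation(rep)
        results.append({
            "__typename": "User",
            "id": viewer.id,
            "purchases": PURCHASES.get((viewer.tenant, viewer.id), []),
        })
    return results
```

The resolver never touches HTTP headers or session state. Everything it needs about the viewer arrives in the representation, so the same entry point can be copied to other Subgraphs.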

Conclusion

The Viewer Pattern is a powerful way to model GraphQL APIs around a "viewer". It not only simplifies modeling the API around the currently logged-in user, it also makes the implementation of Subgraphs in a federated GraphQL API much simpler.

By using the Viewer Pattern, we're able to establish a standardised way for Subgraphs to define an "entry point". We've also simplified authentication, and all the information needed to implement authorization rules in our resolvers is natively available in the request.